Blind people rely mostly on screen readers to consume digital information. Screen readers provide sequential auditory feedback, which makes it hard to get a quick overview of the content.

We propose taking advantage of the Cocktail Party Effect: the ability of people to focus their attention on a single voice among several conversations. In fact, research has shown that concurrent speech can speed up the consumption of digital information. In this project, we are investigating novel interactive applications that leverage concurrent speech to improve users’ experiences with their devices.

Project Details

Title: Applications for Concurrent Speech

Date: Jan 1, 2017

Authors: João Guerreiro, Hugo Nicolau, Tiago Guerreiro, Kyle Montague

Keywords: concurrent speech, 3D audio, blind, touchscreen

Related Publications

- Collaborative Tabletops for Blind People: The Effect of Auditory Design on Workspace Awareness (Best Paper Award)

- Daniel Mendes, Sofia Reis, João Guerreiro, and Hugo Nicolau. 2020. Collaborative Tabletops for Blind People: The Effect of Auditory Design on Workspace Awareness. Proceedings of the 2020 International Conference on Interactive Surfaces & Spaces. http://doi.org/10.1145/3427325

Interactive tabletops offer unique collaborative features, particularly their size, geometry, orientation and, more importantly, the ability to support multi-user interaction. Although previous efforts were made to make interactive tabletops accessible to blind people, the potential to use them in collaborative activities remains unexplored. In this paper, we present the design and implementation of a multi-user auditory display for interactive tabletops, supporting three feedback modes that vary on how much information about the partners’ actions is conveyed. We conducted a user study with ten blind people to assess the effect of feedback modes on workspace awareness and task performance. Furthermore, we analyze the type of awareness information exchanged and the emergent collaboration strategies. Finally, we provide implications for the design of future tabletop collaborative tools for blind users.

- Towards Inviscid Text-Entry for Blind People through Non-Visual Word Prediction Interfaces

- Kyle Montague, João Guerreiro, Hugo Nicolau, Tiago Guerreiro, André Rodrigues, and Daniel Gonçalves. 2016. Towards Inviscid Text-Entry for Blind People through Non-Visual Word Prediction Interfaces. Inviscid Text-Entry and Beyond Workshop at Conference on Human Factors in Computing Systems (CHI).

Word prediction can significantly improve text-entry rates on mobile touchscreen devices. However, these interactions are inherently visual and require constant scanning for new word predictions to actually take advantage of the suggestions. In this paper, we discuss the design space for non-visual word prediction interfaces and finally present Shout-out Suggestions, a novel interface to provide non-visual access to word predictions on existing mobile devices.

- Blind People Interacting with Large Touch Surfaces: Strategies for One-handed and Two-handed Exploration (Best Paper Award)

- Tiago Guerreiro, Kyle Montague, João Guerreiro, Rafael Nunes, Hugo Nicolau, and Daniel J.V. Gonçalves. 2015. Blind People Interacting with Large Touch Surfaces: Strategies for One-handed and Two-handed Exploration. Proceedings of the 2015 International Conference on Interactive Tabletops & Surfaces, ACM, 25–34. http://doi.org/10.1145/2817721.2817743

Interaction with large touch surfaces is still a relatively infant domain, particularly when looking at the accessibility solutions offered to blind users. Their smaller mobile counterparts are shipped with built-in accessibility features, enabling non-visual exploration of linearized screen content. However, it is unknown how well these solutions perform in large interactive surfaces that use more complex spatial content layouts. We report on a user study with 14 blind participants performing common touchscreen interactions using one and two-hand exploration. We investigate the exploration strategies applied by blind users when interacting with a tabletop. We identified six basic strategies that were commonly adopted and should be considered in future designs. We finish with implications for the design of accessible large touch interfaces.

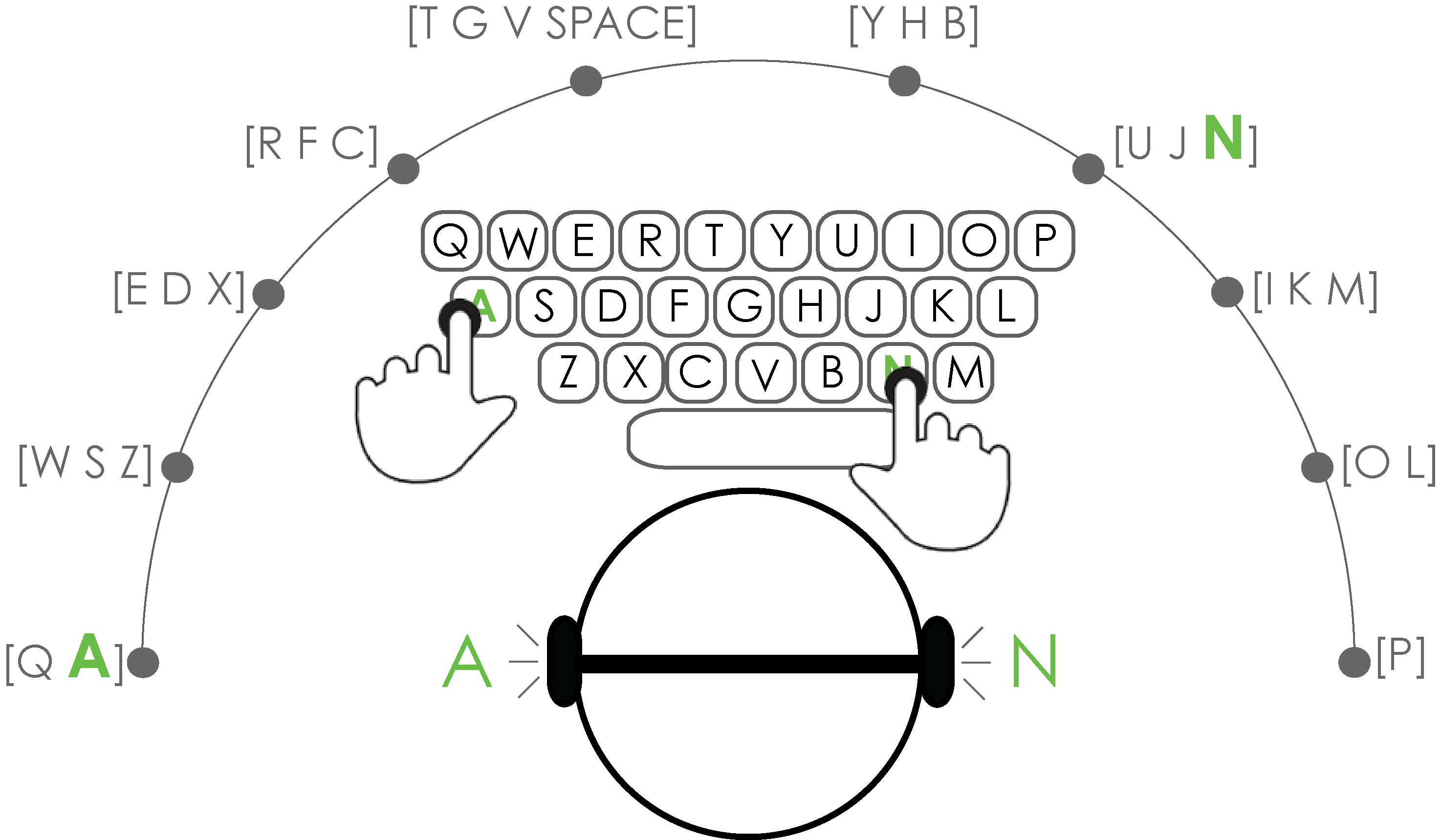

- TabLETS Get Physical: Non-Visual Text Entry on Tablet Devices

- João Guerreiro, André Rodrigues, Kyle Montague, Tiago Guerreiro, Hugo Nicolau, and Daniel Gonçalves. 2015. TabLETS Get Physical: Non-Visual Text Entry on Tablet Devices. Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, ACM, 39–42. http://doi.org/10.1145/2702123.2702373

Tablet devices can display full-size QWERTY keyboards similar to physical ones. Yet, the lack of tactile feedback and the inability to rest the fingers on the home keys result in a highly demanding and slow exploration task for blind users. We present SpatialTouch, an input system that leverages previous experience with physical QWERTY keyboards by supporting two-handed interaction through multitouch exploration and spatial, simultaneous audio feedback. We conducted a user study with 30 novice touchscreen participants entering text under one of two conditions: (1) SpatialTouch or (2) the mainstream accessibility method, Explore by Touch. We show that SpatialTouch enables blind users to leverage previous experience, as they make better use of the home keys and follow more efficient exploration paths. Results suggest that although SpatialTouch did not result in faster input rates overall, it was indeed able to leverage previous QWERTY experience, in contrast to Explore by Touch.